Summary: A general article on how video conferencing works and how it is being implemented.

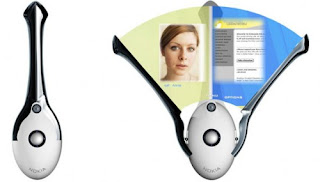

Doctors can use it to assess the best treatment for patients with life-threatening injuries who live miles away from a hospital. Teachers at all academic levels can use it to hold classes and to bring the world -- in the form of an exhibit at the Musee des Beaux Arts in Brussels, an archaeological dig in Tibet, or a class of South African third graders, to name a few possibilities -- before students' very eyes. A 12-year-old can use it to talk with his grandmother in Indiana, and a bank executive can use it to roll out a new marketing strategy to her counterparts at the bank's Berlin, Buenos Aires, London and Singapore offices. It is video conferencing, and industry representatives project that it will be a $5 billion industry by 2002.

Video conferencing is real-time audio and visual interaction between two or more parties in distant locations. A number of technologies now on the market enable such communication, and they vary greatly in cost, capability and the quality of the transmitted image.

How it works

Video conferencing systems today transmit audio and video primarily in one of two ways: either over digital phone lines on an integrated services digital network (ISDN) or over a local area network (LAN) via the Internet. Henry Valentino, president of the Virginia-based Video Phone Store LLC, said that analog video phone technology, which uses standard telephone lines, is "basically dying."

"Everyone's been preaching about the explosion of video phone technology since AT&T introduced it in the '60s. The industry is finally taking off now because of cable modems and high-speed connection services," he added.

It is widely -- although not universally -- expected that ISDN-based systems, which comply with industry standards known as H.320, will eventually be left in the dust by trail-blazing Internet systems, which follow standards known as H.323.

James Whitlock, associate director of computing services at the University at Buffalo, said that in the not-too-distant future ISDN connections will be more problematic and limited than Internet access. "We can presume that the world will have Internet connectivity; we cannot presume that the world will have ISDN," he said.

Until those higher-speed connection services are refined, however, ISDN access will produce better image resolution for most users.
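The bandwidth side of the ISDN comparison can be made concrete with a little arithmetic. The sketch below is illustrative only: the helper name `isdn_bearer_kbps` is hypothetical, the 64 kbit/s per-channel figure is the standard ISDN Basic Rate Interface (BRI) rate, and the three-line case simply assumes a room system that bonds several BRI lines together.

```python
# Illustrative ISDN bandwidth arithmetic (hypothetical helper name).
# An ISDN Basic Rate Interface (BRI) carries two 64 kbit/s B (bearer)
# channels plus a 16 kbit/s D (signalling) channel; only the B channels
# carry the conference's audio and video.

B_CHANNEL_KBPS = 64
B_CHANNELS_PER_BRI = 2


def isdn_bearer_kbps(bri_lines: int) -> int:
    """Return the combined bearer bandwidth of `bri_lines` bonded BRI lines."""
    if bri_lines < 1:
        raise ValueError("need at least one BRI line")
    return bri_lines * B_CHANNELS_PER_BRI * B_CHANNEL_KBPS


if __name__ == "__main__":
    # One line (typical desktop) versus three bonded lines (assumed room system).
    for lines in (1, 3):
        print(f"{lines} BRI line(s): {isdn_bearer_kbps(lines)} kbit/s")
```

Because ISDN channels are dedicated circuits, this rate stays fixed for the length of the call; the sketch does not try to model Internet connections, whose throughput on shared networks varies.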

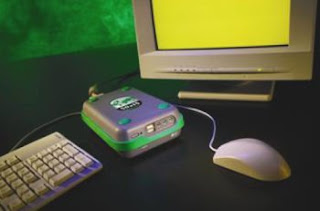

The necessary elements of any video conferencing system, in addition to a computer to house it, are:

A video capture card, or CODEC (for COmpression DECompression) card

A video camera

A microphone

Video conferencing software

A service provider
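The CODEC earns its place on this list because raw video far exceeds what a digital phone line can carry. Here is a back-of-the-envelope sketch, assuming CIF resolution (352 x 288 pixels), 4:2:0 chroma subsampling (12 bits per pixel on average), 30 frames per second and a single 128 kbit/s ISDN line; the function names are hypothetical.

```python
# Rough estimate of why a CODEC is needed (hypothetical helper names).
# Assumptions: CIF resolution (352 x 288), 4:2:0 chroma subsampling
# (12 bits per pixel on average) and 30 frames per second.


def raw_video_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed video bit rate in bits per second."""
    return width * height * bits_per_pixel * fps


def required_compression(raw_bps: int, link_bps: int) -> float:
    """Compression ratio needed to squeeze the raw video into the link."""
    return raw_bps / link_bps


if __name__ == "__main__":
    raw = raw_video_bps(352, 288, 12, 30)
    link = 128_000  # assumed: one ISDN BRI line, two 64 kbit/s B channels
    print(f"raw video: {raw:,} bit/s")
    print(f"compression needed: about {required_compression(raw, link):.0f}:1")
```

Under those assumptions the raw rate is 36,495,360 bit/s, so the CODEC must shrink the picture by roughly 285:1, and that is before any of the line is set aside for audio.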

Consumers today can choose between desktop PC-based video conferencing systems and components, and systems designed for group conferences in rooms specially fitted for that purpose.

Some video conferencing systems support application sharing, so that architects in different locations, for example, can simultaneously revise and collaborate on CAD drawings. Satellite downlinks are another feature available to those with greater technological resources.

Applications

Not ready to commit to such an investment just yet? You can test-drive the technology at several "public rooms" in Western New York, where consumers can rent rooms and equipment to hold point-to-point or multipoint video conferences with anyone in the world who can reach a similar facility. Consumers can deal directly with public video conferencing rooms or with broker firms that establish affiliate locations around the globe. Brokers such as Proximity, which has more than 2,000 locations worldwide, and Affinity, with more than 800 sites, will research how clients can reach personal or business contacts in other locations. Most public video conferencing sites also offer amenities such as catering and videotaping to make sessions more comfortable and productive.

Francesca Mesiah, director of sales and client services at one such venue, the Advanced Training Center (ATC) on Oak Street in Buffalo, described a conference during which doctors from around the world assembled at the center (and at other international locations) to watch a live transmission of heart surgery being performed at Buffalo General Hospital. ATC was then transformed into a medical laboratory as the doctors practiced the surgical techniques they had learned on pigs' hearts in the facility's classrooms.

Another local video conferencing facility, recruitment firm MRI Sales Consultants of Buffalo Inc., uses the technology primarily as a medium for job interviews. General Manager Bob Artis said that corporations can save hundreds of dollars on travel and accommodation expenses for each prospective employee by conducting interviews through video conferencing.

WNED-TV also offers public video conferencing locally, as does Ronco Communications & Electronics Inc. Ronco's Kathleen Hardy said that her company serves a wide variety of clients who use video conferencing for legal applications, including depositions and expert testimony, as well as business, medical and educational purposes.

The broad potential for distance learning through video conferencing is of particular interest to instructors and technicians at the University at Buffalo. Lisa Stephens, associate director of distance learning, coordinates video conferences using the university's three classroom-based systems. The university also has at least three mobile desktop units that are used for smaller conferences, Stephens said.

The possibilities created by evolving video conferencing technologies are vast and are expected to reach into nearly every aspect of our lives in the near future. Charles Rutstein, an analyst with Forrester Research, expects widespread use of video conferencing technologies in approximately five years. But even a self-described video conferencing "zealot" reminds us that there are situations in which old-fashioned interpersonal communication will never be obsolete.

"Sometimes people who are communicating still need to smell the fear in negotiating situations, to press the flesh," said James Whitlock, associate director of computing services at UB. "These technologies won't replace the need for travel or the need for live person-to-person communication. But they will supplement them and increase the effectiveness of our travel and our use of the telephone."

Source: © 1999 American City Business Journals Inc.